
Building a MongoDB 4.4 Sharded Cluster on CentOS 8

2023-05-27


I. Introduction

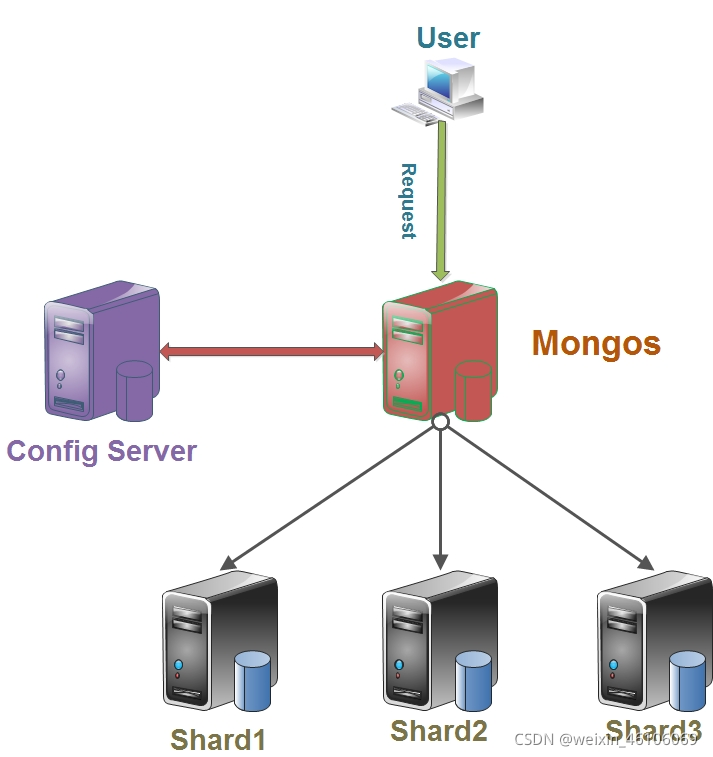

1. Sharding

Besides replica sets, MongoDB supports another kind of cluster: sharding, which meets the needs of rapidly growing data volumes.

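When MongoDB stores very large amounts of data, a single machine may not have enough storage, or may not deliver acceptable read/write throughput. Splitting the data across multiple machines lets the database system store and process more data.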

2. Why use sharding

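A single replica set runs into the following limits:

- All write operations go to the primary node

- Latency-sensitive reads have to be served by the primary

- A replica set has a cap on members (12 in releases before 3.0; since 3.0, up to 50 members, at most 7 of them voting)

- Memory runs short when the request volume is very high

- Local disk space runs out

- Vertical scaling (buying a bigger server) is expensive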

3. How sharding works

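Sharding splits data into chunks and stores those chunks on different servers (shards). MongoDB shards data automatically: a client's read or write request first passes through the mongos routing layer; mongos obtains the sharding metadata (which chunk lives on which shard) from the config servers and uses it to decide which shard should serve the request.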
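The routing decision described above can be illustrated with a simplified range-sharding model. This is only a sketch under stated assumptions: the shard key is an integer, the chunk boundaries in the hypothetical `ROUTING_TABLE` are invented, and the table stands in for the chunk metadata that mongos caches from the config servers. Real MongoDB also supports hashed sharding, compound shard keys and MinKey/MaxKey bounds.

```python
import bisect
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Chunk:
    min_key: int  # inclusive lower bound; the chunk ends where the next one starts
    shard: str


# Hypothetical routing table, sorted by min_key (illustrative values only).
ROUTING_TABLE: List[Chunk] = [
    Chunk(min_key=-(2**63), shard="shard1"),  # stands in for MinKey
    Chunk(min_key=1000, shard="shard2"),
    Chunk(min_key=5000, shard="shard3"),      # last chunk runs up to MaxKey
]


def route(value: int, table: List[Chunk] = ROUTING_TABLE) -> str:
    """Return the shard owning the chunk that contains value."""
    bounds = [chunk.min_key for chunk in table]
    # Index of the last chunk whose lower bound is <= value.
    idx = bisect.bisect_right(bounds, value) - 1
    if idx < 0:
        raise ValueError(f"{value} is below the first chunk's lower bound")
    return table[idx].shard


def shards_for_range(low: int, high: int, table: List[Chunk] = ROUTING_TABLE) -> Set[str]:
    """Return every shard whose chunks overlap the half-open range [low, high)."""
    shards = set()
    for i, chunk in enumerate(table):
        upper = table[i + 1].min_key if i + 1 < len(table) else float("inf")
        if chunk.min_key < high and upper > low:
            shards.add(chunk.shard)
    return shards


print(route(42), route(4999), route(123456))  # shard1 shard2 shard3
print(sorted(shards_for_range(900, 1100)))    # ['shard1', 'shard2']
```

A lookup on a single shard-key value lands on exactly one shard, while a range that crosses a chunk boundary has to visit every shard holding an overlapping chunk.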

II. Environment

- Operating system: CentOS Linux release 8.2.2004 (Core)

- MongoDB version: v4.4.10

- IP: 10.0.0.56; instances: mongos (30000), config (27017), shard1 primary (40001), shard2 arbiter (40002), shard3 secondary (40003)

- IP: 10.0.0.57; instances: mongos (30000), config (27017), shard1 secondary (40001), shard2 primary (40002), shard3 arbiter (40003)

- IP: 10.0.0.58; instances: mongos (30000), config (27017), shard1 arbiter (40001), shard2 secondary (40002), shard3 primary (40003)
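Because each shard's primary, secondary and arbiter are spread across the three hosts, a slip in this list is easy to miss. A minimal Python sketch (the `LAYOUT` dict and `check_layout` function are illustrative, not part of MongoDB) encodes the layout, with port 40001 as shard1, 40002 as shard2 and 40003 as shard3 on every host, and checks that each shard has exactly one planned primary, secondary and arbiter. The roles are planning labels only; the actual primary is decided by replica set election.

```python
from collections import Counter

# Planned layout: host -> {port: (replica set, planned role)}.
LAYOUT = {
    "10.0.0.56": {40001: ("shard1", "primary"), 40002: ("shard2", "arbiter"), 40003: ("shard3", "secondary")},
    "10.0.0.57": {40001: ("shard1", "secondary"), 40002: ("shard2", "primary"), 40003: ("shard3", "arbiter")},
    "10.0.0.58": {40001: ("shard1", "arbiter"), 40002: ("shard2", "secondary"), 40003: ("shard3", "primary")},
}

EXPECTED_ROLES = Counter(primary=1, secondary=1, arbiter=1)


def check_layout(layout):
    """Return a list of problems; an empty list means the layout is consistent."""
    problems = []
    roles_per_set = {}  # replica set name -> Counter of planned roles
    set_per_port = {}   # port -> replica set name first seen on that port
    for host, instances in layout.items():
        for port, (repl_set, role) in instances.items():
            roles_per_set.setdefault(repl_set, Counter())[role] += 1
            expected = set_per_port.setdefault(port, repl_set)
            if expected != repl_set:
                problems.append(f"{host}:{port} is {repl_set}, but port {port} is {expected} elsewhere")
    for repl_set, roles in sorted(roles_per_set.items()):
        if roles != EXPECTED_ROLES:
            problems.append(f"{repl_set} has roles {dict(roles)}")
    return problems


print(check_layout(LAYOUT) or "layout OK")
```

Relabeling 10.0.0.58's port 40002 as shard3 makes `check_layout` report both the port clash and shard3's doubled secondary.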

III. Cluster configuration and deployment

1. Create the directories (same steps on all three servers)

    mkdir -p /mongo/{data,logs,apps,run}
    mkdir -p /mongo/data/shard{1,2,3}
    mkdir -p /mongo/data/config
    mkdir -p /mongo/apps/conf

2. Install MongoDB and create the configuration files (same steps on all three servers)

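MongoDB can be installed by downloading the release package and setting up the environment variables by hand. Here a yum repository was configured instead and MongoDB was installed from it; afterwards each instance is started by running mongod with the path of its configuration file.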

(1) mongo-config configuration file

    vim /mongo/apps/conf/mongo-config.yml

    systemLog:
      destination: file
      # log path
      path: "/mongo/logs/mongo-config.log"
      logAppend: true
    storage:
      journal:
        enabled: true
      # data directory
      dbPath: "/mongo/data/config"
      engine: wiredTiger
      wiredTiger:
        engineConfig:
          cacheSizeGB: 12
    processManagement:
      fork: true
      pidFilePath: "/mongo/run/mongo-config.pid"
    net:
      # can also be set to this host's own IP
      bindIp: 0.0.0.0
      # port
      port: 27017
    setParameter:
      enableLocalhostAuthBypass: true
    replication:
      # replica set name
      replSetName: "mgconfig"
    sharding:
      # run as a config server
      clusterRole: configsvr
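The config server file pins `cacheSizeGB: 12`, while the shard files below set no cache size, so each shard mongod falls back to WiredTiger's default: the larger of 50% of (RAM - 1 GB) and 256 MB. Since every host runs the config server plus three shard mongod instances (including the arbiter), the configured caches can add up to more than the host's physical memory. A small Python sketch of that arithmetic; the 32 GB host size is hypothetical.

```python
def default_wiredtiger_cache_gb(ram_gb: float) -> float:
    """WiredTiger's default internal cache: the larger of 50% of (RAM - 1 GB) and 256 MB."""
    return max(0.5 * (ram_gb - 1), 0.25)


def planned_cache_gb(ram_gb: float, config_cache_gb: float = 12, shard_instances: int = 3) -> float:
    """Total cache ceiling on one host: the config server's explicit cacheSizeGB
    plus one default-sized cache per shard mongod (the shard files set none)."""
    return config_cache_gb + shard_instances * default_wiredtiger_cache_gb(ram_gb)


ram = 32  # hypothetical host memory in GB
print(f"{planned_cache_gb(ram)} GB of cache planned on a {ram} GB host")
```

With these numbers the cache ceilings total 58.5 GB on a 32 GB host. The cache is an upper bound rather than memory reserved at startup, but under load the instances would compete for RAM, so co-located instances are commonly given an explicit, smaller cacheSizeGB each.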

(2) mongo-shard1 configuration file

    vim /mongo/apps/conf/mongo-shard1.yml

    systemLog:
      destination: file
      path: "/mongo/logs/mongo-shard1.log"
      logAppend: true
    storage:
      journal:
        enabled: true
      dbPath: "/mongo/data/shard1"
    processManagement:
      fork: true
      pidFilePath: "/mongo/run/mongo-shard1.pid"
    net:
      bindIp: 0.0.0.0
      # note: each shard uses its own port
      port: 40001
    setParameter:
      enableLocalhostAuthBypass: true
    replication:
      # replica set name
      replSetName: "shard1"
    sharding:
      # run as a shard server
      clusterRole: shardsvr

(3) mongo-shard2 configuration file

    vim /mongo/apps/conf/mongo-shard2.yml

    systemLog:
      destination: file
      path: "/mongo/logs/mongo-shard2.log"
      logAppend: true
    storage:
      journal:
        enabled: true
      dbPath: "/mongo/data/shard2"
    processManagement:
      fork: true
      pidFilePath: "/mongo/run/mongo-shard2.pid"
    net:
      bindIp: 0.0.0.0
      # note: each shard uses its own port
      port: 40002
    setParameter:
      enableLocalhostAuthBypass: true
    replication:
      # replica set name
      replSetName: "shard2"
    sharding:
      # run as a shard server
      clusterRole: shardsvr

(4) mongo-shard3 configuration file

    vim /mongo/apps/conf/mongo-shard3.yml

    systemLog:
      destination: file
      path: "/mongo/logs/mongo-shard3.log"
      logAppend: true
    storage:
      journal:
        enabled: true
      dbPath: "/mongo/data/shard3"
    processManagement:
      fork: true
      pidFilePath: "/mongo/run/mongo-shard3.pid"
    net:
      bindIp: 0.0.0.0
      # note: each shard uses its own port
      port: 40003
    setParameter:
      enableLocalhostAuthBypass: true
    replication:
      # replica set name
      replSetName: "shard3"
    sharding:
      # run as a shard server
      clusterRole: shardsvr
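The three shard files differ only in the shard name (used for the log, data and pid paths and for replSetName) and the port. As a sketch, assuming the same directory layout as above, the hypothetical helpers below render all three from one template with plain string formatting, so the files cannot drift apart.

```python
from pathlib import Path

# Same structure as the shard1-shard3 files above; only {name} and {port} vary.
SHARD_TEMPLATE = """\
systemLog:
  destination: file
  path: "/mongo/logs/mongo-{name}.log"
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: "/mongo/data/{name}"
processManagement:
  fork: true
  pidFilePath: "/mongo/run/mongo-{name}.pid"
net:
  bindIp: 0.0.0.0
  port: {port}
setParameter:
  enableLocalhostAuthBypass: true
replication:
  replSetName: "{name}"
sharding:
  clusterRole: shardsvr
"""

SHARDS = {"shard1": 40001, "shard2": 40002, "shard3": 40003}


def render_shard_config(name: str, port: int) -> str:
    """Fill the template for one shard."""
    return SHARD_TEMPLATE.format(name=name, port=port)


def write_shard_configs(conf_dir: str, shards=SHARDS):
    """Write mongo-<name>.yml for every shard into conf_dir and return the paths."""
    out = Path(conf_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for name, port in shards.items():
        path = out / f"mongo-{name}.yml"
        path.write_text(render_shard_config(name, port))
        paths.append(path)
    return paths


print(render_shard_config("shard2", SHARDS["shard2"]))
```

On each host, `write_shard_configs("/mongo/apps/conf")` would produce the three files shown above.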

(5) mongo-route (mongos) configuration file

    vim /mongo/apps/conf/mongo-route.yml

    systemLog:
      destination: file
      # adjust the log path as needed
      path: "/mongo/logs/mongo-route.log"
      logAppend: true
    processManagement:
      fork: true
      pidFilePath: "/mongo/run/mongo-route.pid"
    net:
      bindIp: 0.0.0.0
      # note: mongos listens on its own port
      port: 30000
    setParameter:
      enableLocalhostAuthBypass: true
    replication:
      localPingThresholdMs: 15
    sharding:
      # point mongos at the config server replica set (all config servers listen on 27017)
      configDB: mgconfig/10.0.0.56:27017,10.0.0.57:27017,10.0.0.58:27017
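`sharding.configDB` has to name the config server replica set (`mgconfig`, matching replSetName) and list each member with the port it actually listens on, which is 27017 on all three config servers. The two hypothetical helpers below, a sketch rather than anything shipped with MongoDB, build that string from the host list and split an existing one back apart so the ports can be compared with net.port.

```python
def config_db_string(repl_set: str, hosts, port: int = 27017) -> str:
    """Build sharding.configDB as <replSetName>/<host:port>,<host:port>,..."""
    if not hosts:
        raise ValueError("at least one config server is required")
    return repl_set + "/" + ",".join(f"{host}:{port}" for host in hosts)


def parse_config_db(value: str):
    """Split a configDB string into (replSetName, [(host, port), ...])."""
    repl_set, sep, members = value.partition("/")
    if not sep:
        raise ValueError("configDB must start with the config replica set name")
    pairs = []
    for member in members.split(","):
        host, _, port = member.rpartition(":")
        pairs.append((host, int(port)))
    return repl_set, pairs


CONFIG_HOSTS = ["10.0.0.56", "10.0.0.57", "10.0.0.58"]
print(config_db_string("mgconfig", CONFIG_HOSTS))
```

Running `parse_config_db` over an existing mongo-route.yml value makes a stray port (for example 27018 where the config server listens on 27017) easy to spot.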

3. Start the mongo-config service (same steps on all three servers)

    # stop the MongoDB service installed earlier via yum
    systemctl stop mongod
    cd /mongo/apps/conf/
    mongod --config mongo-config.yml
    # check that port 27017 is listening
    netstat -ntpl
    Active Internet connections (only servers)
    Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
    tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      1129/sshd
    tcp        0      0 127.0.0.1:631           0.0.0.0:*               LISTEN      1131/cupsd
    tcp        0      0 127.0.0.1:6010          0.0.0.0:*               LISTEN      2514/sshd: root@pts
    tcp        0      0 127.0.0.1:6011          0.0.0.0:*               LISTEN      4384/sshd: root@pts
    tcp        0      0 0.0.0.0:27017           0.0.0.0:*               LISTEN      4905/mongod
    tcp        0      0 0.0.0.0:111             0.0.0.0:*               LISTEN      1/systemd
    tcp6       0      0 :::22                   :::*                    LISTEN      1129/sshd
    tcp6       0      0 ::1:631                 :::*                    LISTEN      1131/cupsd
    tcp6       0      0 ::1:6010                :::*                    LISTEN      2514/sshd: root@pts
    tcp6       0      0 ::1:6011                :::*                    LISTEN      4384/sshd: root@pts
    tcp6       0      0 :::111                  :::*                    LISTEN      1/systemd
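netstat only shows listeners on the local machine. To check all three hosts from one place, a minimal sketch using Python's standard `socket.create_connection` tries a TCP connection to each host and port; a successful connection only shows that something accepts connections there, not that it is a healthy mongod. The host list and port come from section II; the function names are hypothetical.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def check_hosts(hosts, ports):
    """Map each (host, port) pair to whether it accepted a connection."""
    return {(host, port): is_listening(host, port) for host in hosts for port in ports}


if __name__ == "__main__":
    results = check_hosts(["10.0.0.56", "10.0.0.57", "10.0.0.58"], [27017])
    for (host, port), ok in results.items():
        print(f"{host}:{port} {'listening' if ok else 'NOT reachable'}")
```

Once the shard and mongos instances are up, the same call with ports `[27017, 40001, 40002, 40003, 30000]` covers every instance in the layout.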

4. Connect to one instance and initialize the replica set

    # connect with the mongo shell
    mongo 10.0.0.56:27017

    // initialize the replica set; "mgconfig" must match replSetName in the config file
    config = {_id: "mgconfig", members: [
        {_id: 0, host: "10.0.0.56:27017"},
        {_id: 1, host: "10.0.0.57:27017"},
        {_id: 2, host: "10.0.0.58:27017"}
    ]}
    rs.initiate(config)
    // "ok" : 1 means initialization succeeded
    {
        "ok" : 1,
        "$gleStats" : {
            "lastOpTime" : Timestamp(1634710950, 1),
            "electionId" : ObjectId("000000000000000000000000")
        },
        "lastCommittedOpTime" : Timestamp(0, 0)
    }
    // check the status
    rs.status()
    {
        "set" : "mgconfig",
        "date" : ISODate("2021-10-20T06:24:24.277Z"),
        "myState" : 1,
        "term" : NumberLong(1),
        "syncSourceHost" : "",
        "syncSourceId" : -1,
        "configsvr" : true,
        "heartbeatIntervalMillis" : NumberLong(2000),
        "majorityVoteCount" : 2,
        "writeMajorityCount" : 2,
        "votingMembersCount" : 3,
        "writableVotingMembersCount" : 3,
        "optimes" : {
            "lastCommittedOpTime" : { "ts" : Timestamp(1634711063, 1), "t" : NumberLong(1) },
            "lastCommittedWallTime" : ISODate("2021-10-20T06:24:23.811Z"),
            "readConcernMajorityOpTime" : { "ts" : Timestamp(1634711063, 1), "t" : NumberLong(1) },
            "readConcernMajorityWallTime" : ISODate("2021-10-20T06:24:23.811Z"),
            "appliedOpTime" : { "ts" : Timestamp(1634711063, 1), "t" : NumberLong(1) },
            "durableOpTime" : { "ts" : Timestamp(1634711063, 1), "t" : NumberLong(1) },
            "lastAppliedWallTime" : ISODate("2021-10-20T06:24:23.811Z"),
            "lastDurableWallTime" : ISODate("2021-10-20T06:24:23.811Z")
        },
        "lastStableRecoveryTimestamp" : Timestamp(1634711021, 1),
        "electionCandidateMetrics" : {
            "lastElectionReason" : "electionTimeout",
            "lastElectionDate" : ISODate("2021-10-20T06:22:41.335Z"),
            "electionTerm" : NumberLong(1),
            "lastCommittedOpTimeAtElection" : { "ts" : Timestamp(0, 0), "t" : NumberLong(-1) },
            "lastSeenOpTimeAtElection" : { "ts" : Timestamp(1634710950, 1), "t" : NumberLong(-1) },
            "numVotesNeeded" : 2,
            "priorityAtElection" : 1,
            "electionTimeoutMillis" : NumberLong(10000),
            "numCatchUpOps" : NumberLong(0),
            "newTermStartDate" : ISODate("2021-10-20T06:22:41.509Z"),
            "wMajorityWriteAvailabilityDate" : ISODate("2021-10-20T06:22:42.322Z")
        },
        "members" : [
            {
                "_id" : 0,
                "name" : "10.0.0.56:27017",
                "health" : 1,
                "state" : 1,
                "stateStr" : "PRIMARY",
                "uptime" : 530,
                "optime" : { "ts" : Timestamp(1634711063, 1), "t" : NumberLong(1) },
                "optimeDate" : ISODate("2021-10-20T06:24:23Z"),
                "syncSourceHost" : "",
                "syncSourceId" : -1,
                "infoMessage" : "",
                "electionTime" : Timestamp(1634710961, 1),
                "electionDate" : ISODate("2021-10-20T06:22:41Z"),
                "configVersion" : 1,
                "configTerm" : 1,
                "self" : true,
                "lastHeartbeatMessage" : ""
            },
            {
                "_id" : 1,
                "name" : "10.0.0.57:27017",
                "health" : 1,
                "state" : 2,
                "stateStr" : "SECONDARY",
                "uptime" : 113,
                "optime" : { "ts" : Timestamp(1634711061, 1), "t" : NumberLong(1) },
                "optimeDurable" : { "ts" : Timestamp(1634711061, 1), "t" : NumberLong(1) },
                "optimeDate" : ISODate("2021-10-20T06:24:21Z"),
                "optimeDurableDate" : ISODate("2021-10-20T06:24:21Z"),
                "lastHeartbeat" : ISODate("2021-10-20T06:24:22.487Z"),
                "lastHeartbeatRecv" : ISODate("2021-10-20T06:24:22.906Z"),
                "pingMs" : NumberLong(0),
                "lastHeartbeatMessage" : "",
                "syncSourceHost" : "10.0.0.56:27017",
                "syncSourceId" : 0,
                "infoMessage" : "",
                "configVersion" : 1,
                "configTerm" : 1
            },
            {
                "_id" : 2,
                "name" : "10.0.0.58:27017",
                "health" : 1,
                "state" : 2,
                "stateStr" : "SECONDARY",
                "uptime" : 113,
                "optime" : { "ts" : Timestamp(1634711062, 1), "t" : NumberLong(1) },
                "optimeDurable" : { "ts" : Timestamp(1634711062, 1), "t" : NumberLong(1) },
                "optimeDate" : ISODate("2021-10-20T06:24:22Z"),
                "optimeDurableDate" : ISODate("2021-10-20T06:24:22Z"),
                "lastHeartbeat" : ISODate("2021-10-20T06:24:23.495Z"),
                "lastHeartbeatRecv" : ISODate("2021-10-20T06:24:22.514Z"),
                "pingMs" : NumberLong(0),
                "lastHeartbeatMessage" : "",
                "syncSourceHost" : "10.0.0.56:27017",
                "syncSourceId" : 0,
                "infoMessage" : "",
                "configVersion" : 1,
                "configTerm" : 1
            }
        ],
        "ok" : 1,
        "$gleStats" : {
            "lastOpTime" : Timestamp(1634710950, 1),
            "electionId" : ObjectId("7fffffff0000000000000001")
        },
        "lastCommittedOpTime" : Timestamp(1634711063, 1),
        "$clusterTime" : {
            "clusterTime" : Timestamp(1634711063, 1),
            "signature" : {
                "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
                "keyId" : NumberLong(0)
            }
        },
        "operationTime" : Timestamp(1634711063, 1)
    }

5. Deploy the shard1 replica set: start the shard1 instances (same steps on all three servers)
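The shard1 replica set is presumably initialized the same way as the config servers in step 4, except that it contains an arbiter. As a sketch only, assuming each shard1 instance has been started with `mongod --config mongo-shard1.yml` as in step 3, the hypothetical helper `initiate_doc` builds the `rs.initiate()` document for shard1 from the section II layout (10.0.0.56 primary, 10.0.0.57 secondary, 10.0.0.58 arbiter, all on port 40001). `arbiterOnly: true` is the standard member field for an arbiter; the `priority` values are an assumption used to bias the election toward the planned primary.

```python
import json

# Planned shard1 roles from section II (port 40001 on every host).
SHARD1_ROLES = {
    "10.0.0.56": "primary",
    "10.0.0.57": "secondary",
    "10.0.0.58": "arbiter",
}


def initiate_doc(repl_set: str, port: int, roles: dict) -> dict:
    """Build a replica set config document suitable for rs.initiate()."""
    members = []
    for member_id, (host, role) in enumerate(roles.items()):
        member = {"_id": member_id, "host": f"{host}:{port}"}
        if role == "arbiter":
            member["arbiterOnly"] = True  # votes in elections, holds no data
        elif role == "primary":
            member["priority"] = 2        # assumption: favor the planned primary
        else:
            member["priority"] = 1
        members.append(member)
    return {"_id": repl_set, "members": members}


# Print a statement that could be pasted into `mongo 10.0.0.56:40001`.
print("rs.initiate(" + json.dumps(initiate_doc("shard1", 40001, SHARD1_ROLES)) + ")")
```

The `_id` passed in ("shard1") has to match replSetName in mongo-shard1.yml, just as "mgconfig" did for the config servers.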
